Innovation programs do not usually fail dramatically. They fade. Participation drops a little each campaign. Ideas sit in review longer than they should. Feedback gets vaguer. Leadership stops asking about it. One day you realize the program is technically still running but nobody really cares anymore, including you.

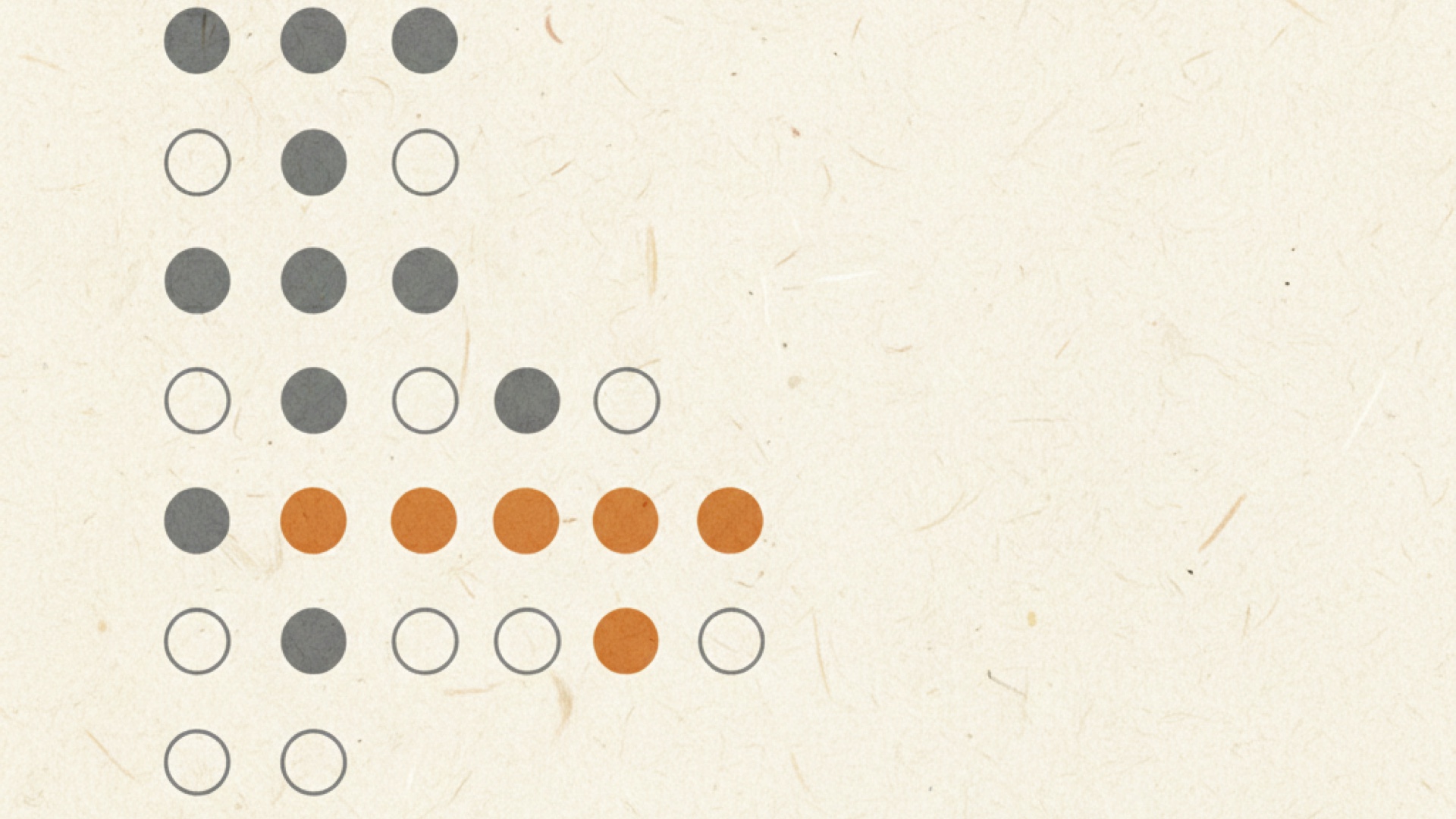

This diagnostic helps you find where the problem actually is before you try to fix it. Work through the questions honestly. An answer of "yes" or "mostly" means that area is probably not the problem. An answer of "no" or "not really" points you toward where to focus.

Section 1: The Setup (Before the Campaign)

1. Does your challenge question describe a specific problem, not a broad topic?

Yes means: someone could read the brief and know immediately whether they have something relevant to contribute. No means: the question is broad enough that it means different things to different people, and you will get unfocused submissions as a result.

If no: See the guide on writing an Idea Challenge that actually gets relevant ideas.

2. Do you specify what you are NOT looking for in your challenge brief?

Yes means: you have ruled out the obvious, the already-tried, and the out-of-scope. No means: a third of your submissions will be things you already know do not work, and your evaluation team will spend time declining them instead of reviewing the good ones.

If no: Add a "not looking for" section to your next brief.

3. Do you know who will evaluate submissions before the campaign launches?

Yes means: the evaluation team is identified, their time is roughly committed, and they understand the criteria. No means: the campaign will close and then you will spend two weeks figuring out who is supposed to review it.

If no: Name your evaluation team before you publish the next campaign.

4. Have you planned your audience before you launch, not after?

Yes means: you know which departments should be represented, you have thought about who to include beyond the obvious names, and you have a realistic estimate of participation. No means: you sent it to everyone, or to the first list that came to mind, and now you are surprised by the result.

If no: See the audience planning worksheet.

5. Does your launch communication explain what will happen with submissions?

Yes means: the launch email states a specific date by which participants will hear the outcome. No means: you launched with a vague "we will get back to you" and people already suspect they will not hear anything.

If no: See the communication template pack.

Section 2: During the Campaign

6. Do you send a mid-campaign update?

Yes means: participants hear something during the campaign that shows it is real and active. No means: there is a gap between the launch email and the reminder, and momentum dies in the silence.

If no: See the guide on keeping momentum during an active campaign.

7. Do you track which departments are participating in real time?

Yes means: you can see who has submitted and who has not, and you can do targeted outreach to underrepresented groups before the campaign closes. No means: you find out at the end that an important team did not participate, and it is too late.

If no: Build a participation tracker before your next campaign launches.
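
A participation tracker does not need to be elaborate. The sketch below is one minimal way to do it in Python, assuming you can export an invite list (person to department) and a list of who has submitted so far. The function names, the sample data, and the 10 percent threshold are all illustrative assumptions, not part of any particular platform.

```python
from collections import Counter


def participation_by_department(invited, submitters):
    """Return {department: share of invited people who have submitted}.

    invited: dict mapping person -> department (hypothetical export format).
    submitters: iterable of people who have submitted at least one idea.
    """
    invited_counts = Counter(invited.values())
    # Count each person once and ignore anyone who was not on the invite list.
    active = {person for person in submitters if person in invited}
    submitted_counts = Counter(invited[person] for person in active)
    return {
        dept: submitted_counts[dept] / total
        for dept, total in invited_counts.items()
    }


def underrepresented(rates, threshold=0.10):
    """List departments whose participation rate is below the threshold.

    The 10 percent default is an arbitrary placeholder; pick your own.
    """
    return sorted(dept for dept, rate in rates.items() if rate < threshold)


# Made-up example data.
invited = {"ana": "Ops", "ben": "Ops", "cy": "Finance", "di": "Finance", "ed": "IT"}
submitters = ["ana", "ana", "cy", "zed"]

rates = participation_by_department(invited, submitters)
needs_outreach = underrepresented(rates)
```

Run this a few times during the campaign, not only at the end, so that the list of departments needing outreach is still actionable before the deadline.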

8. Do your reminders reference something specific from the campaign, not just the deadline?

Yes means: your reminder shows themes emerging, shares a strong submission, or explains what kind of ideas are still needed. No means: your reminder is a copy of the launch email with the deadline changed, and it does not motivate anyone who was not already planning to submit.

If no: See reminder Email 2 in the communication template pack.

Section 3: Evaluation

9. Does your evaluation team agree on criteria before they start reviewing?

Yes means: everyone is scoring against the same definition of impact, feasibility, and fit. No means: one reviewer is prioritizing cost savings, another is prioritizing ease of implementation, and a third is prioritizing political visibility, and none of them know it.

If no: See the idea scoring scorecard guide.

10. Do you have a triage process that separates initial sorting from detailed evaluation?

Yes means: your first pass is fast and uses simple criteria to sort, not to decide. Your detailed evaluation is reserved for the ideas that survive the first pass. No means: every idea gets the same level of scrutiny regardless of its merit, and your team runs out of energy before they finish reviewing.

If no: See the guide on triaging 100 or more ideas in two hours.

11. Can you typically complete evaluation within two weeks of a campaign closing?

Yes means: your process is efficient enough to keep momentum. No means: by the time you communicate an outcome, participants have already decided nothing will happen.

If no: The triage guide will help. Also review whether your evaluation team is the right size for the volume of submissions you are receiving.

12. Do you document why ideas were declined, not just which ones were?

Yes means: you can tell a submitter specifically why their idea did not move forward. No means: you decline things without a record of the reasoning, and your feedback to submitters is generic because you do not remember the specifics.

If no: Add a one-sentence reason field to your evaluation process.

Section 4: Feedback and Communication

13. Does every submitter receive individual feedback?

Yes means: nobody finishes a campaign wondering whether their idea was read. No means: some submitters hear nothing, and those are exactly the people who will not participate next time.

If no: See the guide on giving feedback that builds trust.

14. Does your feedback explain the actual reason for a decision, not just the outcome?

Yes means: a declined submitter understands why, not just that their idea was not selected. No means: you are sending variations of "we cannot move forward at this time" and people are rightly frustrated by the vagueness.

If no: See the five feedback templates in the feedback guide.

15. Do you send an outcome communication to everyone, including people who did not submit?

Yes means: even non-participants know the program is producing results. No means: only submitters find out what happened, and the broader audience has no reason to believe the program is worth their time next campaign.

If no: Add a brief public summary to your post-campaign process. See Email 3 and Email 4 in the communication template pack.

Section 5: Implementation and Measurement

16. Does every selected idea have a named owner and a specific next step?

Yes means: ideas that are approved actually move. No means: ideas sit in selected status indefinitely because ownership was never clarified.

If no: Before the next evaluation session ends, agree that no idea leaves the room in approved status without a name and a next step attached to it.

17. Do you have a 30-day check-in process for ideas in implementation?

Yes means: you catch stalled ideas early and can unblock them or reset expectations. No means: ideas silently die in implementation and you find out six months later that nothing happened.

If no: See the 30-day after-action checklist.

18. Do you track implementation rate across campaigns?

Yes means: you know what percentage of the ideas you advance actually get implemented. No means: you are reporting submission counts as success metrics and have no way to know whether the program is actually working.

If no: See the guide on measuring your innovation program without lying to yourself.
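
As a rough illustration of the metric in question 18, implementation rate is the number of ideas implemented divided by the number of ideas advanced. The Python sketch below assumes you keep one record per campaign with those two counts; the field names and figures are hypothetical.

```python
def implementation_rate(advanced, implemented):
    """Share of advanced ideas that were actually implemented.

    Returns None when no ideas were advanced, so an empty campaign
    is not mistaken for a 0 percent rate.
    """
    if advanced == 0:
        return None
    return implemented / advanced


def overall_rate(campaigns):
    """Implementation rate pooled across all campaigns."""
    advanced = sum(c["advanced"] for c in campaigns)
    implemented = sum(c["implemented"] for c in campaigns)
    return implementation_rate(advanced, implemented)


# Made-up figures for illustration only.
campaigns = [
    {"name": "Campaign A", "submitted": 120, "advanced": 10, "implemented": 4},
    {"name": "Campaign B", "submitted": 45, "advanced": 8, "implemented": 2},
]

per_campaign = {
    c["name"]: implementation_rate(c["advanced"], c["implemented"])
    for c in campaigns
}
pooled = overall_rate(campaigns)
```

Note that the submission counts are deliberately left out of the calculation. Campaign A has far more submissions than Campaign B in this example, but that says nothing about how many ideas were acted on.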

19. Can you point to at least one concrete outcome from your program in the past six months?

Yes means: you have something real to show, even if it is small: a process change, a cost saving, or a quality improvement. No means: the program is producing activity but not results, and it is probably only a matter of time before leadership questions its value.

If no: The problem is likely in evaluation (ideas are not getting selected) or implementation (ideas are getting selected but not acted on). The triage and scoring guides address the first. The after-action checklist addresses the second.

20. Do you report program outcomes to leadership at least quarterly?

Yes means: leadership knows the program exists, what it is producing, and what it needs. No means: the program runs in isolation and leadership will eventually question why it exists or cut it when budget pressure arrives.

If no: See the one-page innovation report for leadership.

Reading Your Results

If you answered no to questions in Section 1 (Setup): start here. Problems in setup cascade through everything that follows. You cannot fix low participation with better reminders if the challenge question is too vague to engage people.

If your Section 1 answers are mostly yes but Section 4 (Feedback) is mostly no: this is the most common pattern for programs that started strong and are now declining. The setup is fine. The feedback loop is broken. People submitted once, heard nothing meaningful, and stopped.

If your Section 5 (Implementation) answers are mostly no: ideas are being generated and selected but not acted on. This is an organizational problem, not a campaign problem. The ideas are working. The implementation infrastructure is not. That is a different conversation to have, and it is worth having explicitly with whoever controls the resources.

If you answered no to more than 10 questions overall: pick one section to fix at a time, starting with setup. Trying to fix everything at once usually means nothing gets fixed properly.
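
If you record your answers in a spreadsheet or script, the reading rules above can be sketched as a small Python function. This is one possible interpretation, not a scoring standard: it assumes "mostly no" means more than half the questions in a section, treats any single no in Section 1 as a reason to start there, and uses hypothetical labels for the findings.

```python
# Question numbers per section, as laid out in this diagnostic.
SECTIONS = {
    "setup": range(1, 6),
    "during": range(6, 9),
    "evaluation": range(9, 13),
    "feedback": range(13, 16),
    "implementation": range(16, 21),
}


def read_results(answers):
    """Map yes/no answers to the patterns described above.

    answers: dict of question number (1 to 20) -> True for yes, False for no.
    Returns a list of labels (hypothetical names) in priority order.
    """
    no_counts = {
        name: sum(1 for q in questions if not answers[q])
        for name, questions in SECTIONS.items()
    }

    def mostly_no(name):
        # Assumption: "mostly no" means more than half the section.
        return no_counts[name] > len(SECTIONS[name]) / 2

    findings = []
    if sum(no_counts.values()) > 10:
        findings.append("fix_one_section_at_a_time")
    if no_counts["setup"] > 0:
        findings.append("start_with_setup")
    if not mostly_no("setup") and mostly_no("feedback"):
        findings.append("feedback_loop_broken")
    if mostly_no("implementation"):
        findings.append("implementation_not_resourced")
    return findings


# Example: everything is a yes except two of the three feedback questions.
answers = {q: True for q in range(1, 21)}
answers[13] = False
answers[14] = False
result = read_results(answers)  # ["feedback_loop_broken"]
```

More than one label can come back at once. When that happens, the order in the list follows the advice above: work on setup before anything downstream of it.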